Abstract

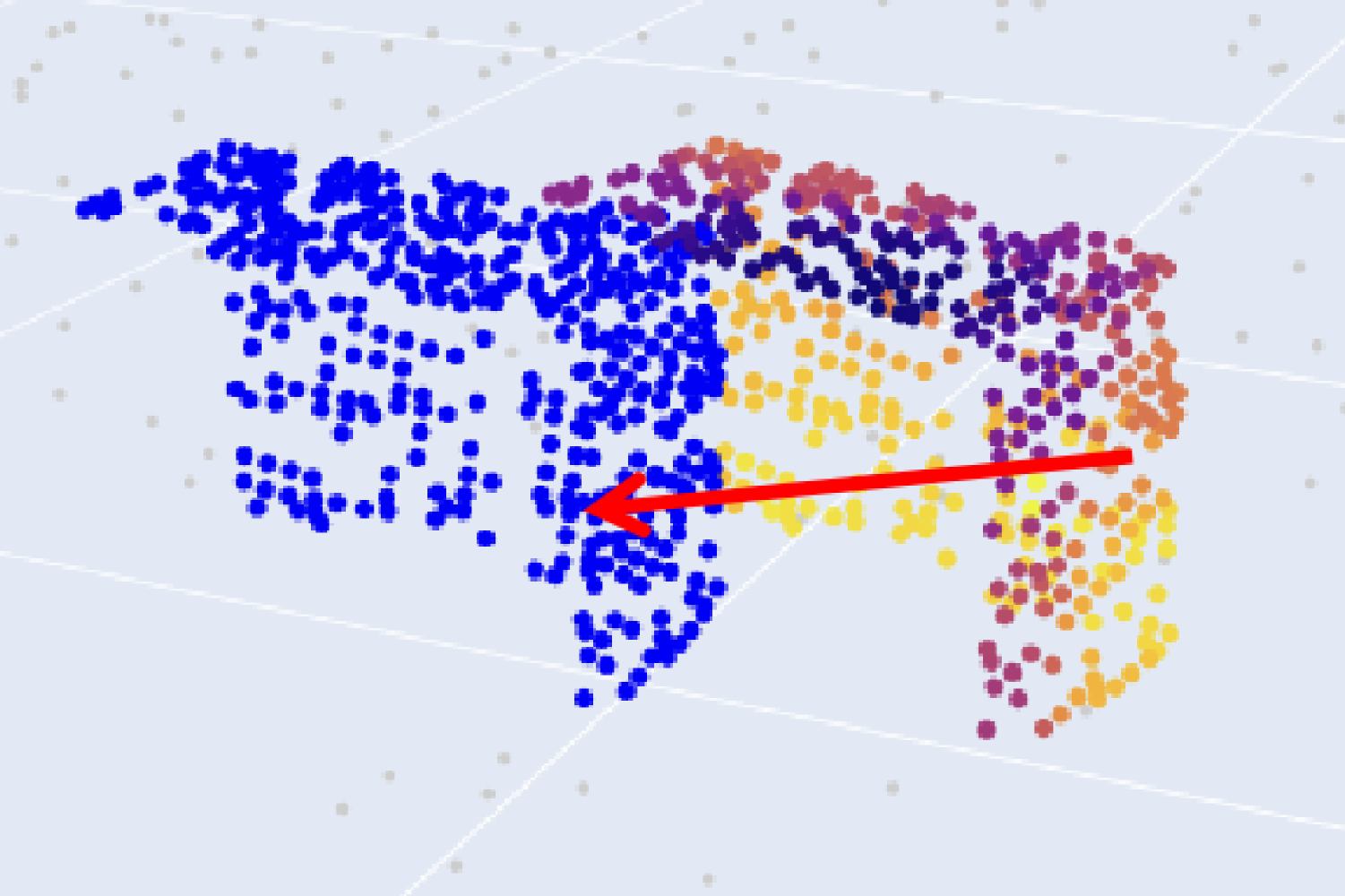

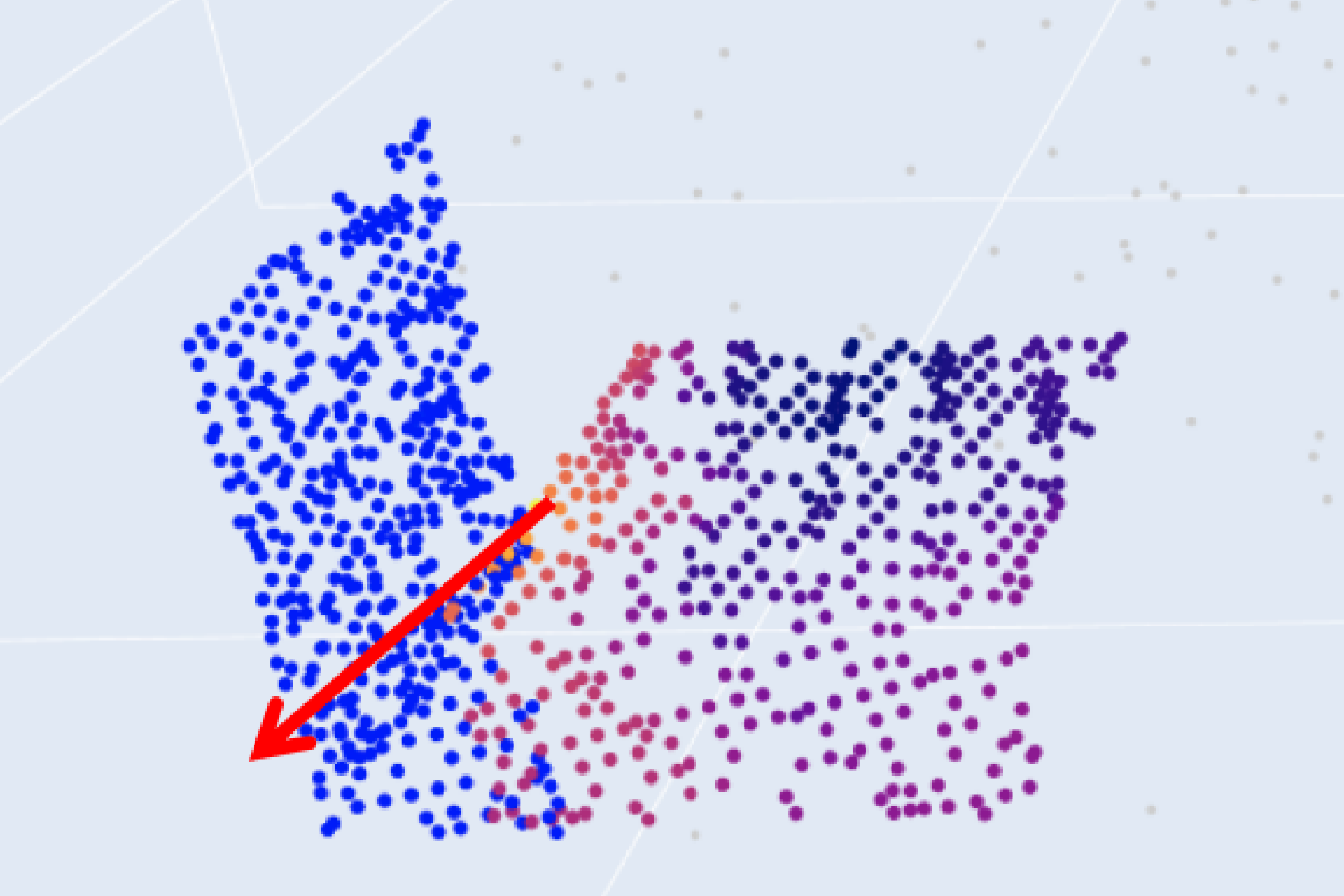

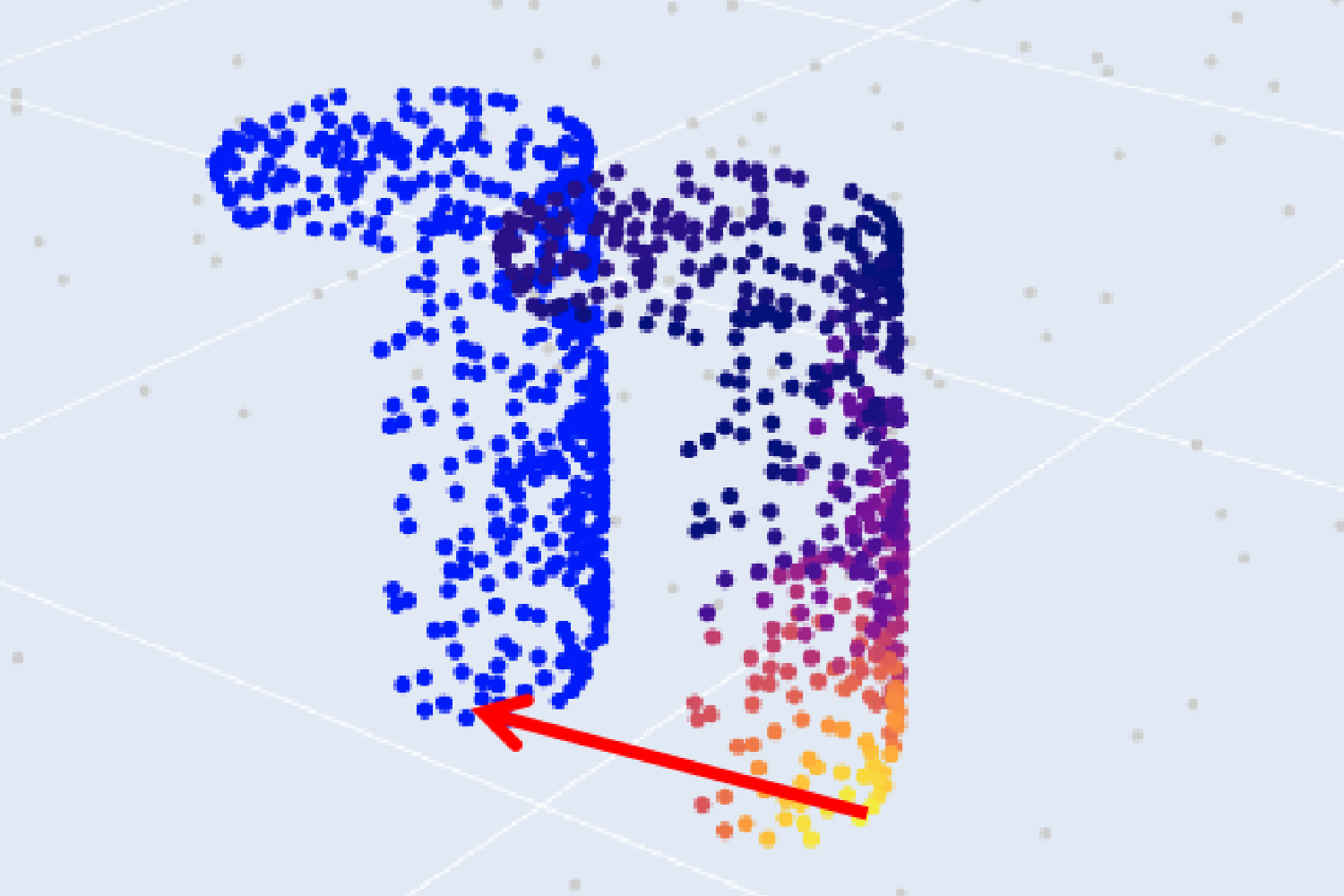

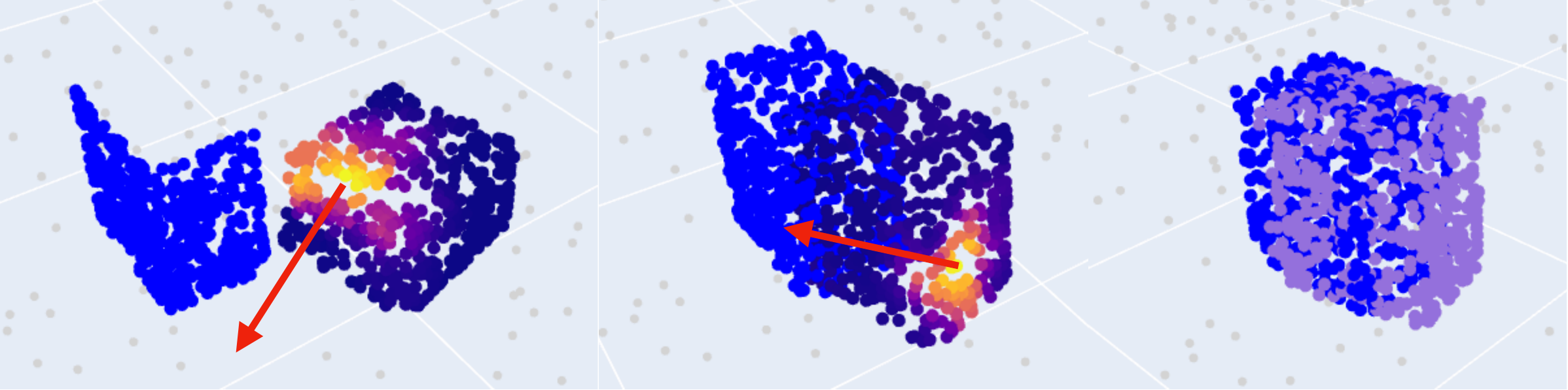

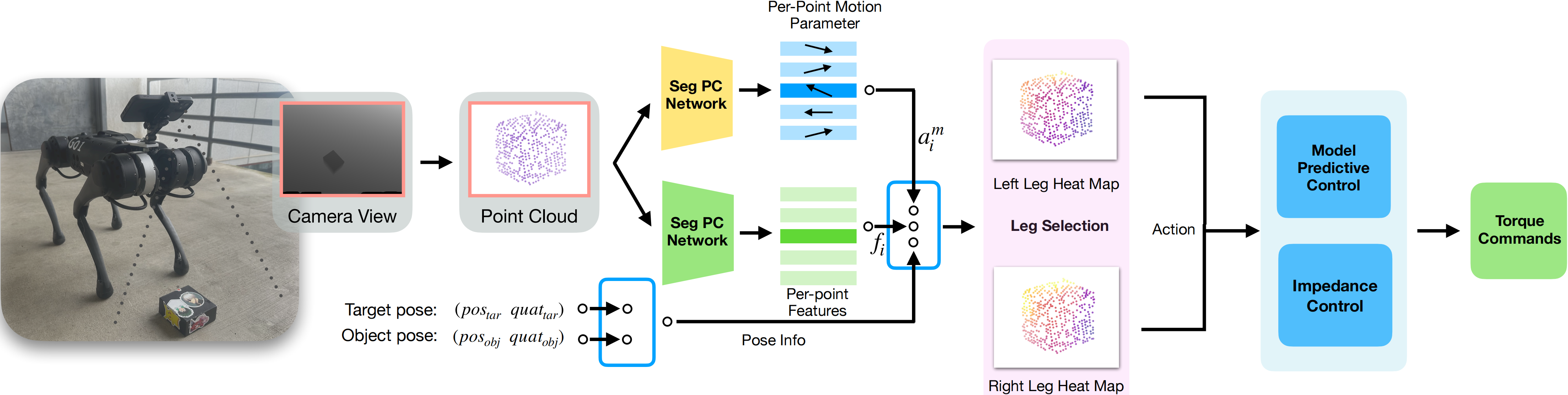

Animals can use their arms and legs for both locomotion and manipulation, and we envision quadruped robots having the same versatility. This work presents a system that enables a quadruped robot to interact with objects using its legs, drawing inspiration from non-prehensile manipulation techniques. The proposed system has two main components: a manipulation module and a locomotion module. The manipulation module, which decides how the leg should interact with the object, is trained with reinforcement learning (RL) using point cloud observations and object-centric actions. The locomotion module, which controls leg movements and body pose adjustments, is built on impedance control and Model Predictive Control (MPC). Besides manipulating objects with a single leg, the proposed system can also choose between the left and right legs based on critic maps and move objects to distant goals by adjusting the robot base. In experiments, we evaluate the proposed system on object pose alignment tasks both in simulation and in the real world, demonstrating leg-based object manipulation skills that are more versatile than those in previous work.

System Overview

Visual Manipulation with Legs: The operation of our system is divided into five stages. 1) A point cloud of the object is obtained from a depth camera mounted in front of the robot. 2) The object point cloud and the transformed target pose are passed through our network. 3) The manipulation leg is selected by comparing the maximum Q-values for the left and right legs. 4) The pre-contact, contact, and action information is sent to the low-level control system. 5) The control system directs the robot to execute the action with the selected leg through impedance control, while the Model Predictive Controller maintains balance with the non-manipulation legs; the computed torques are then sent to the robot.
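The leg selection in stage 3 can be illustrated with a minimal sketch. It assumes each critic map is a 1-D array holding one Q-value per point of the object point cloud, one map per candidate leg. That layout, the function name `select_leg`, and the tie-break toward the left leg are assumptions made for illustration, not details confirmed by the system description.

```python
import numpy as np


def select_leg(q_left, q_right):
    """Choose the manipulation leg by comparing the maximum Q-value of each critic map.

    q_left, q_right: 1-D arrays with one Q-value per object point
    (hypothetical layout, assumed for this sketch).
    Returns (leg name, index of the best contact point, its Q-value).
    """
    best_left = int(np.argmax(q_left))
    best_right = int(np.argmax(q_right))
    # Ties go to the left leg; this is an arbitrary choice for the sketch.
    if q_left[best_left] >= q_right[best_right]:
        return "left", best_left, float(q_left[best_left])
    return "right", best_right, float(q_right[best_right])


# Toy critic maps over a three-point cloud (made-up values).
q_left = np.array([0.1, 0.7, 0.3])
q_right = np.array([0.2, 0.4, 0.9])
leg, contact_index, q_value = select_leg(q_left, q_right)
print(leg, contact_index, q_value)
```

In this toy case the right leg is chosen because its best contact point has the higher Q-value. The returned point index stands in for the contact location that would be passed on to the low-level control system in stage 4.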